Why AI pilots fail and how DevOps turns experiments into real value

What you’ll find in this article:

Why 95% of GenAI pilots fail, according to MIT research cited by Forbes;

The real, non-obvious causes behind failed AI initiatives;

Why avoiding operational friction undermines AI adoption;

How DevOps bridges the gap between AI experiments and production reality;

Why AI complements engineers instead of replacing them;

How automation with context creates predictable, defensible ROI.

Why read this article: if you are a CTO, tech leader, or founder under pressure to “do something with AI,” this article will help you separate durable strategy from short-lived hype. Instead of abstract promises, you will gain a grounded perspective on how DevOps enables AI to deliver consistent, auditable, and economically sound results, even when most pilots fail.

Why so many AI pilots fail before reaching production

Artificial intelligence has become one of the most discussed topics in executive meetings, boardrooms, and engineering planning sessions. For many organizations, AI pilots are launched under pressure to signal innovation, often before operational readiness is clearly defined. In this article, the term “AI pilots” refers to early-stage AI initiatives or proofs of concept designed to test feasibility, value, or performance before broader adoption.

Yet despite massive enthusiasm, reality paints a far less optimistic picture. Recent MIT research, referenced by Forbes, reports that approximately 95% of GenAI pilots never reach sustained production or deliver measurable business impact. These initiatives are rarely shut down formally. Most simply stall, degrade, or become too fragile to trust, eventually fading into irrelevance.

Contrary to popular belief, the main reason behind these failures is not model quality, hallucinations, or vendor immaturity. While those issues exist, they are rarely decisive on their own. The real failure happens earlier, at the structural and operational level.

Most organizations treat AI as an add-on rather than as an operational capability embedded into real systems. As a result, pilots succeed in isolation but collapse under real-world pressure, where security, reliability, cost, and governance matter.

The illusion of progress: why AI demos don’t survive production

AI pilots often look successful because they are designed to avoid friction. They rely on clean datasets, simplified workflows, and controlled conditions. This creates the illusion of progress while masking deeper weaknesses.

Once these systems interact with live environments (real users, real infrastructure, real compliance requirements), their limitations surface quickly. Pipelines break. Costs escalate unpredictably. Outputs become inconsistent. Trust erodes, both technically and organizationally.

MIT’s findings highlight an uncomfortable truth: the 5% of AI initiatives that succeed do not aim for sweeping transformation. Instead, they focus on automating specific, repetitive tasks already embedded inside operational workflows. In other words, success comes from precision, not ambition.

This insight reframes AI success as an operational challenge, not a model-selection problem.

Why avoiding friction is the fastest path to failure

One of the most counterintuitive conclusions from the MIT study is that companies often fail precisely because they try to eliminate friction altogether. They choose generic tools because they are easy to deploy. They prioritize speed of adoption over clarity of integration. They value impressive demos more than operational rigor.

However, friction is not the enemy. Unmanaged friction is.

Operational friction exists because real systems are complex. They involve dependencies, security boundaries, historical behavior, and business constraints. When AI ignores this context, it produces results that appear correct but are operationally unsafe.

This is why so many pilots remain stuck in what could be called demo purgatory: impressive enough to showcase, but not trustworthy enough to operate.

DevOps exists to manage friction by making complexity observable, governable, and repeatable.

DevOps as the operational backbone of AI

DevOps has always been about more than tooling or deployment speed. At its core, it is a discipline that emphasizes repeatability, observability, accountability, and controlled change. These principles become indispensable when AI enters production environments.

AI systems are dynamic by nature. They evolve over time, adapt to new inputs, and interact with systems that are themselves constantly changing. Without robust pipelines, monitoring, and governance, this dynamism quickly becomes a liability.

DevOps provides the structure that allows AI to operate reliably over time. Through infrastructure as code, CI/CD pipelines, automated testing, and continuous observability, teams gain visibility into how AI behaves not only at launch, but continuously.

Practical examples include versioned model deployments, automated rollback mechanisms, cost monitoring tied to usage, and audit logs for AI-triggered actions.
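As a rough illustration of three of those examples working together (versioned deployments, automated rollback, and audit logs), here is a minimal Python sketch. Everything in it is hypothetical: the `ModelRegistry` class, the `check_and_rollback` helper, and the 5% error-rate threshold are assumptions made for illustration, not a reference to any specific tool.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class ModelRegistry:
    """Hypothetical in-memory registry; a real one would persist its state."""

    history: list = field(default_factory=list)    # deployed versions, oldest first
    audit_log: list = field(default_factory=list)  # one entry per action taken

    def _record(self, action: str, version: str, reason: str) -> None:
        # Every change is logged with a timestamp and a reason, so it can be audited.
        self.audit_log.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "action": action,
            "version": version,
            "reason": reason,
        })

    @property
    def active(self) -> Optional[str]:
        # The most recently deployed version is the one serving traffic.
        return self.history[-1] if self.history else None

    def deploy(self, version: str, reason: str) -> None:
        self.history.append(version)
        self._record("deploy", version, reason)

    def rollback(self, reason: str) -> str:
        if len(self.history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self.history.pop()
        self._record("rollback", self.history[-1], reason)
        return self.history[-1]


def check_and_rollback(registry: ModelRegistry, error_rate: float,
                       max_error_rate: float = 0.05) -> bool:
    """Roll back when the observed error rate exceeds the (assumed) threshold."""
    if error_rate > max_error_rate:
        registry.rollback(f"error rate {error_rate:.2%} above {max_error_rate:.2%}")
        return True
    return False
```

In practice the error rate would come from monitoring, and the rollback would trigger a pipeline rather than edit a list, but the shape stays the same: each change is versioned, reversible, and leaves a record.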

Why replacing engineers was never the real objective

The idea that AI will replace developers dominates headlines, but it rarely reflects the concerns of experienced engineering leaders. The real fear is not workforce displacement; it is loss of control.

Leaders worry about deploying systems they cannot explain, secure, or maintain. They worry about accelerating technical debt. They worry about becoming dependent on opaque systems that resist accountability.

In practice, AI is far more effective when it removes repetitive operational burden rather than replacing human judgment. When embedded into well-designed DevOps workflows, AI frees engineers to focus on architecture, problem-solving, and strategic decisions.

Without that structure, AI simply accelerates chaos.

Automation with context, not automation for optics

A critical distinction between successful and failed AI initiatives lies in how automation is approached. Unsuccessful teams automate for visibility. Successful teams automate for leverage.

Context-aware automation understands dependencies, security constraints, historical behavior, and business priorities. It knows not only what action to take, but when and under which constraints.

DevOps supplies that context by connecting AI systems to logs, metrics, deployment histories, and compliance rules. Every automated action becomes traceable, auditable, and reversible.

Examples include scaling actions tied to cost thresholds, deployments constrained by security posture, and automated remediation that respects change-management policies.
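One way to picture these constraints is as a policy gate that every AI-proposed action must pass before it runs. The Python sketch below is illustrative only: the `Context` fields, the action names, and the rules themselves are assumptions, and a real gate would read them from cost, security, and change-management systems.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Context:
    """Hypothetical operational context supplied by DevOps tooling."""
    projected_monthly_cost: float
    monthly_budget: float
    open_critical_vulns: int
    in_change_freeze: bool


def evaluate(action: str, ctx: Context) -> tuple:
    """Return (allowed, reason) for an action proposed by an AI system."""
    # Change-management policy applies to every action.
    if ctx.in_change_freeze:
        return False, "change freeze in effect"
    # Scaling is tied to a cost threshold.
    if action == "scale_up" and ctx.projected_monthly_cost > ctx.monthly_budget:
        return False, "projected cost exceeds budget"
    # Deployments are constrained by security posture.
    if action == "deploy" and ctx.open_critical_vulns > 0:
        return False, "open critical vulnerabilities"
    return True, "allowed"
```

Returning a reason alongside the decision keeps each refusal explainable, which is what makes the automation auditable rather than merely automatic.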

Turning AI from experimental cost into business value

Another reason AI pilots struggle is economic uncertainty. Many initiatives launch with vague promises of efficiency but lack a clear path to measurable return. Infrastructure costs rise silently. Usage becomes unpredictable. Finance teams lose confidence.

DevOps introduces financial clarity. By coupling automation with cost observability and governance, teams can correlate AI usage with reduced manual effort, improved reliability, and lower operational overhead.

This does not guarantee ROI by default, but it creates the conditions to measure it consistently. In organizations where DevOps and AI mature together, AI stops being an experiment and becomes a value driver.
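To make "measuring it consistently" concrete, a simple calculation can relate AI spend to the manual effort it removes. The Python sketch below is one possible formulation under stated assumptions: the function names, the inputs, and the figures in the usage note are hypothetical, and real accounting would include more cost and benefit categories.

```python
def monthly_net_value(hours_saved: float, hourly_cost: float, ai_spend: float) -> float:
    """Value of manual effort avoided minus what the AI workload cost to run."""
    return hours_saved * hourly_cost - ai_spend


def monthly_roi(hours_saved: float, hourly_cost: float, ai_spend: float) -> float:
    """Net value per unit of AI spend; a negative result means the workload lost money."""
    if ai_spend <= 0:
        raise ValueError("ai_spend must be positive to compute ROI")
    return monthly_net_value(hours_saved, hourly_cost, ai_spend) / ai_spend
```

With illustrative inputs of 120 hours saved at a cost of 50 per hour and 4,000 of AI spend, the net value is 2,000 and the ROI is 0.5. The point is less the formula than the inputs: hours saved and AI spend only become available when usage and cost are observed continuously.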

Why DevOps remains essential regardless of the AI cycle

Whether the AI market continues to accelerate or experiences a correction, DevOps remains foundational. If AI adoption grows, infrastructure, security, and automation demands grow with it. AI cannot reach production without operational support.

If the market slows, organizations must stabilize, refactor, or dismantle poorly implemented systems. That work also depends on DevOps expertise. In both futures, DevOps is not optional. It is the stabilizing force that keeps systems reliable, adaptable, and defensible.

From pilots to platforms

In this context, pilots are isolated proofs of concept. Platforms are AI capabilities designed to be operated, governed, and evolved over time. Organizations that succeed with AI stop asking whether a pilot “works” and start asking whether a system can be operated safely, integrated cleanly, and sustained long-term.

DevOps drives this shift in mindset. It favors deliberate progress, incremental automation, and long-term resilience over short-term spectacle.

Final reflection

AI does not fail because it promises too much. It fails because it is deployed without structure. DevOps anchors AI in reality by embedding it into systems that can be observed, controlled, and improved over time.

The organizations that succeed are not chasing hype. They are building operational foundations that allow AI to deliver value safely, repeatedly, and with accountability, one well-governed workflow at a time.

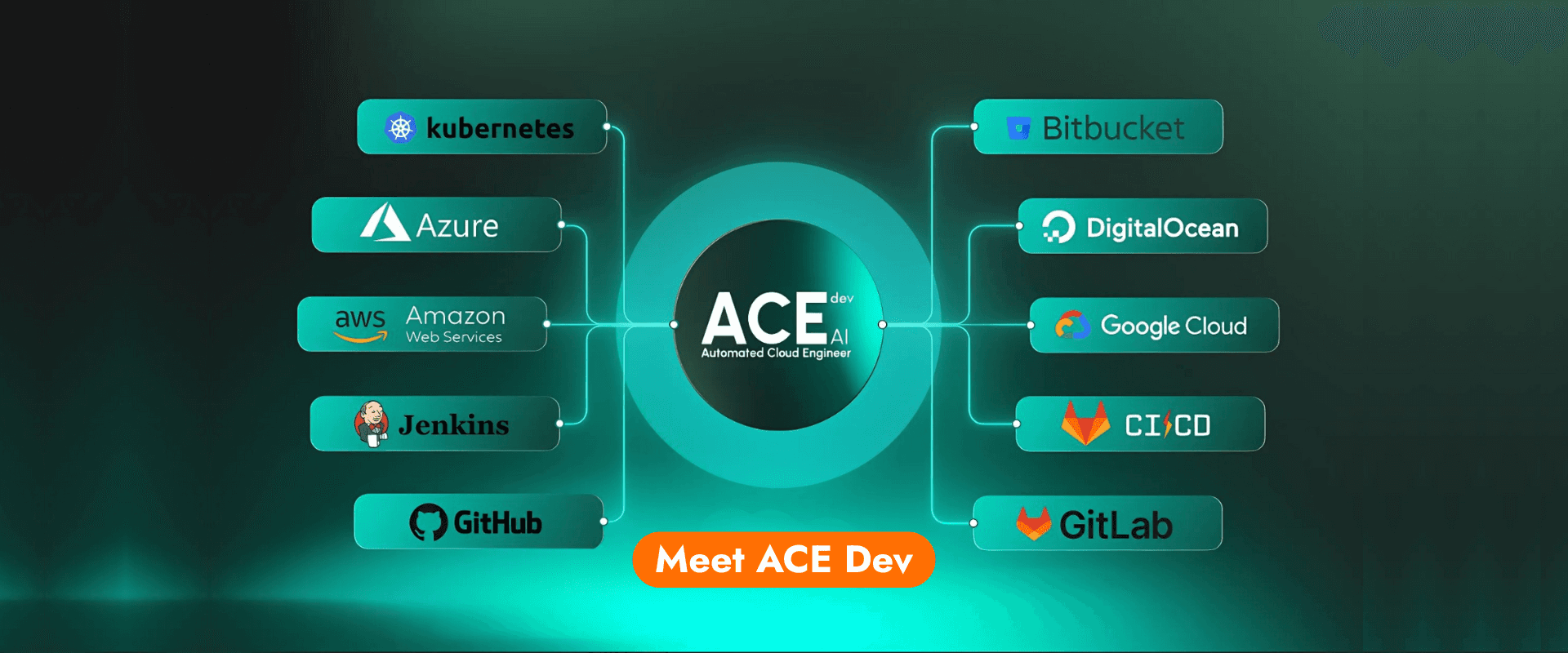

EZOps Cloud delivers secure and efficient Cloud and DevOps solutions worldwide, backed by a proven track record and a team of real experts dedicated to your growth, making us a top choice in the field.